Man and Machine, May the Lord Save: A. An Initial Look at a Computer (Column 694)

With God’s help

Disclaimer: This post was translated from Hebrew using AI (ChatGPT 5 Thinking), so there may be inaccuracies or nuances lost. If something seems unclear, please refer to the Hebrew original or contact us for clarification.

In columns 35 and 175 I discussed the definitions of intelligence and judgment. There I pointed out the difference between us and other creatures or objects that cannot be regarded as intelligent because they do not exercise judgment but simply behave according to their nature. My claim was that a dimension of judgment is lacking there, and there is nothing beyond mechanical calculation. In columns 590–592 (and also in an interview with me here) I discussed the status of AI models from two perspectives: whether there is anything we can do that they cannot, and to what extent they can be seen as human (that is, whether there are essential differences between them and us, irrespective of differences in capabilities). A few days ago I saw a video showing step-by-step computation by a DeepSeek model that has just entered the market, and it opened a door for me to an interesting aspect of this issue that connects those two discussions. All this concerns the question of whether the machine is a person, and therefore I wanted to touch on it again here. As it happens, right while I was writing I received questions from a former student that dealt with the opposite question: is a human a machine?

It ended up becoming a series of three columns. In this column I describe the problem in general and then explain how an ordinary computer works. In the next column I will address neural networks and compare them with classical computation as described here. Some technical understanding is needed to clarify the fundamental claims, so for readers unfamiliar with the field I have added simple, schematic descriptions of how these machines operate. I am certainly not an expert, but I hope I have not erred too much. In any case, this is a good opportunity for those unfamiliar with the field to become acquainted with it. The third and final column will deal with the reverse question.

The Basic Argument

We can present the starting point of the discussion through two thought experiments. Although both have been discussed here in the past, I will briefly repeat them. The first was proposed by Alan Turing as a test for when a program can be considered a person: I sit in a room facing two computer screens, one connected to a person and the other to a program. If I converse with both and cannot tell which is the person and which is the computer, then that computer can be considered a person. Turing’s experiment tests capabilities; that is, it shows us when the computer can be regarded as doing what an intelligent human does.

A further step in the discussion is John Searle’s Chinese Room, which looks similar but illustrates a different point. Imagine a person who does not know Chinese, sitting in a room with two windows, next to an infinite container of Chinese characters. Questions written in Chinese are passed to him through one window, and through the other he hands out answers in Chinese that he assembles at random from the characters in the container. Every time his answer does not fit the question, he receives a painful electric shock and tries again. Searle argues that, given infinite time, he will eventually answer fluently in Chinese. In other words, he will pass the Turing test.

The Chinese Room does not examine the definitions of a person or of thinking, but the definition of understanding. The training this person undergoes is easy to grasp today, since it closely resembles the training regimes used for the new AI programs. In the next column we will see that this is precisely how they are trained, and in the end they do converse with us just as in an ordinary conversation with human beings. Searle’s experiment is now carried out again and again in thousands of places around the world, with growing success. The conclusion is that it is indeed possible to train a program to reach human conversational ability and pass the Turing test. But the question raised by the Chinese Room remains: does that person (or the computer that passed the Turing test) understand Chinese? The assumption is that being human involves understanding, not merely correct use of language. So although we have no clear answer to whether there is anything we can do that a (contemporary) machine cannot, today’s machines easily pass the Turing test.

It is important to understand that this is not merely a question of capabilities. Even assuming the machine passes the Turing test and conducts an intelligent conversation like a person, we can still ask whether it “understands” what is being discussed between us, or whether this is merely a technical imitation of human actions (the well-known film about Turing is called “The Imitation Game”), with no understanding or consciousness behind it. It is hard to call such a thing understanding, or even conversation. It is hard to say that the person in the Chinese Room truly knows Chinese; at most, he performs a technical operation of swapping words, imitating Chinese speakers. I say this not because the machine’s ability is inferior to ours as human beings (it is not), but because of the nature of its operation.

Wittgenstein on Meaning and Use

In my conversation with Sarel Weinberg, Wittgenstein’s thesis about meaning and use came up. Wittgenstein argued that one should not distinguish between the meaning of a statement and its use; that is, if we know how to use it, we understand it. This was of course said long before Searle, and probably even before Turing, but his words have greatly influenced thinking to this day, and the rise of the new AI models calls for revisiting them. From my description so far, it is clear that these models know how to use language but do not really understand it.

Moreover, in that same conversation I also said that in my view even Wittgenstein himself did not truly identify meaning with use. The early Wittgenstein ends his Tractatus Logico-Philosophicus with the famous sentence: “Whereof one cannot speak, thereof one must remain silent.” In other words, he holds that there is a stratum of meaning beyond use, except that we have no way to formulate it as such or to discuss it (somewhat like Kant’s thing-in-itself, the noumenon). Wittgenstein merely asserts that all we can talk about is use and description, not meaning. Meaning is the thing described, and therefore by definition we have no way to speak about it. But that does not mean there is no such thing. On the contrary, use indicates meaning; it is not itself meaning, as his words are sometimes simplistically read. The later Wittgenstein (in the Philosophical Investigations) was even less analytic and positivist, so there it is even more plausible that he saw a stratum of meaning beyond use. I think his well-known rule-following argument (see more in column 482, among others) illustrates this well.

In fact, this is not even a matter for debate. There is no such thing as use that does not represent meaning. The rules of use reflect meaning, and without it we could not act according to them. Scholars of the Brisker school like to say that we do not ask “why?” but only “what?”; that is, we merely describe and do not attempt to understand. This is, of course, utter nonsense. There is no such thing as asking only “what?” without “why?”. The “what” expresses conceptions regarding the “why.” In logic we distinguish between two levels of a language (formal or not): syntax and semantics. Syntax consists of the formal structures of the language, and these determine its use. Semantics is the meaning of things. There is supposed to be a fit between semantics and syntax (there are theorems in logic, soundness and completeness, about the relation between the two), since the latter expresses the former.
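To make the syntax/semantics fit concrete for a single rule, here is a minimal Python sketch (my own illustration, not part of the original discussion). A syntactic inference rule only tells us which formulas we may write down; it is sound if the semantics (truth values) never makes its premises true and its conclusion false. Checking one rule by truth table is not the soundness theorem itself, which covers a whole proof system; the function names are hypothetical.

```python
from itertools import product


def implies(a: bool, b: bool) -> bool:
    """Semantic truth table of material implication 'a -> b'."""
    return (not a) or b


def modus_ponens_is_sound() -> bool:
    """Syntactic rule: from 'p' and 'p -> q', write down 'q'.
    Sound if, under every assignment of truth values, true premises give a true conclusion."""
    for p, q in product([False, True], repeat=2):
        if p and implies(p, q) and not q:
            return False  # counterexample: premises true, conclusion false
    return True


def affirming_consequent_is_sound() -> bool:
    """A fallacious rule: from 'q' and 'p -> q', write down 'p'."""
    for p, q in product([False, True], repeat=2):
        if q and implies(p, q) and not p:
            return False  # p = False, q = True is a counterexample
    return True


print(modus_ponens_is_sound())          # True
print(affirming_consequent_is_sound())  # False
```

Both rules are equally “usable” as symbol manipulation; only the semantic check separates the one that expresses the meaning of “if ... then” from the one that does not.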

Returning to the Chinese Room, that person uses the Chinese language without any understanding behind it. It would seem that the two can be detached. That is indeed what happens with computers as well. But does that mean that this is our situation too? Or alternatively, can we infer from this comparison that a computer is also a person?

The Comparison to Human Beings

Assuming the machine’s abilities in every domain are not inferior to human abilities, why is there room to debate whether we are dealing with a person or not? As I explained, what is missing is the mental array behind the conversation, like the cognitive understanding behind the exchange of Chinese sentences: the understanding and meaning (semantics) underlying the use (syntax). The fact that the machine imitates human beings, even with great success, does not mean that it is necessarily a person. The argument that uses this to prove that the machine is a person reminds me of a nasty joke at the expense of Hasidism: why can one not dress up as a Rebbe on Purim? Because if you put on royal garb, are accompanied by two burly attendants in long frock coats, and ride in a Mercedes, then you really are a Rebbe (and not merely someone dressed up as one). This joke essentially says that there is no content behind “Rebbishness”: it is a façade that does not express an essence. So too with the person in the Chinese Room or Turing’s machine: we are dealing with a façade that imitates human beings, with no essence behind it. Thank God human beings are not Rebbes, and therefore with respect to them there can indeed be a difference between the imitation and the original.

So why are there nevertheless many who think that machines (in principle, even if not necessarily the machines that already exist) can be a kind of person, even if made of metal? This, of course, depends on our conception of the human being’s uniqueness and added value. One can raise several different lines of reasoning in favor of this, and there is always a counterargument alongside them. I will present three such lines here:

- The claim: Our consciousness is not essential to our being human. Capabilities are what matter and what give us the human added value. Therefore, when a machine has those capabilities, it deserves to be considered a human being.

And the rebuttal: This claim is very strange, since in this sense water and air also have capabilities. I have explained that water “solves” very complex equations that humans cannot come close to solving: the flow of water is described by solutions that our mathematical abilities cannot reach. Does that make water a person? What is the principled difference between a computer and water? And if you prefer animals, think about a bird’s navigation ability. Is it correct to infer from it that the bird has intelligence greater than ours? Absolutely not. It merely does what is in its nature and does not truly solve problems, just like water. In the columns mentioned above I explained that such abilities are not intelligence.

- The claim: There is no human added value at all. A human is like an animal or a machine, and there is no reason to distinguish between them despite the differences (if there are any essential differences at all). The most zealous adherents of this approach will say that a human has no mental dimension at all, and that his behavior is nothing but a collection of technical computational operations. A human is essentially a machine of flesh and blood.

And the rebuttal: Beyond the moral claim that sees uniqueness in human beings, I have often addressed the strange mistake of materialists who identify mental acts and states—like love, fear, solving equations, remembering, and so on—with electrical currents in the brain. See, for example, column 593 describing my conversation with Yosef Neumann.

- The claim: Machines that have reached human capabilities have likely developed awareness and consciousness (that is, a mental dimension similar to ours), and therefore they have essentially become human like us.

And the rebuttal: The (strange) assumption underlying this approach is that awareness is nothing but a product of capabilities, not something independent. Therefore, whoever has human capabilities must also have consciousness and a mental dimension like human beings. Incidentally, one could conclude from this that humans with especially diminished abilities might not be “human” in this sense. A milder formulation says only that consciousness may have developed in such machines, not that it necessarily has. Theoretically this could be true, but it is highly implausible. Why should a computer develop consciousness, but not a tree or water? And perhaps all things do have a mental dimension? (See a bizarre discussion in this direction in column 690.)

From this one could also conclude that it is possible to feel love (in the romantic, essential sense) toward a machine (see the film Her), that we should be concerned for its rights, and the like. A few weeks ago I read an article (I cannot find it now) arguing that we are obliged to care for the rights of AI programs. This is admittedly somewhat unhinged esoterica, and of course it is not the prevailing view (there are some eccentric AI researchers and futurists, like Ray Kurzweil, who enjoy speculations, usually very foolish and far-fetched, that ostensibly challenge our thinking). See, for example, this pamphlet and also here, where you can see that the simple assumption is that there is no such thing and that a machine cannot be considered a person. The discussion there is only about how to protect human rights against foreseeable harms from AI models.

So far these are matters I have already addressed in the past. To understand the next point we need to recognize another step that AI models have taken very recently. But first I will give a schematic description of how computers operate in general. In the next column I will move on to the new models, as a continuation of the evolution the computer has undergone until it reached the point where people wonder whether it should be seen as a person. I apologize in advance to readers who are knowledgeable about computers (surely more than I am), but this description is needed in order to form a position on these issues.

What Is a Computer?

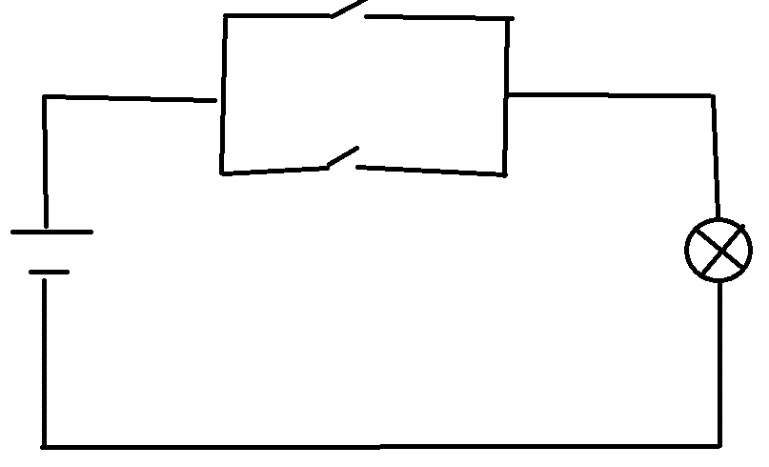

To understand what a computer actually is, I will use simple models that describe the essence of its operation. Suppose we want to build a machine that knows how to add two numbers. For simplicity, we will build a machine that adds 0 and 1; that is, a machine that receives as input the pair of numbers 0 and 1 and outputs 1. Assume that the numbers 0 and 1 are represented by an electrical potential of 0 or 5 volts. A computer that performs this operation is an electrical circuit that receives as input on one wire 0 volts and on another 5 volts, and ensures that the bulb at the output lights up. This suffices to understand the principle, but for those who are a bit familiar, here is a simple electrical circuit that performs this operation:

On the left there is a 5-volt battery. On the right there is a lamp that lights when the circuit with the battery is closed. Each of the two branches of the upper line contains a switch. The inputs control the switches: when the input to a switch is 1 (5 volts) the switch is closed (connected), and when the input is 0 it is open (disconnected). This arrangement is called a “parallel connection,” and in such a connection it suffices that at least one of the two switches be closed (or both) for the circuit to close and the lamp to light. So if the inputs are 1 and 0, the switch receiving the 1 closes the circuit while the other stays open, and the lamp lights: the result is 1. This seems almost banal, but note that it is essentially a very primitive computer. It performed the operation 1+0, and it also correctly performs two more additions: 0+1 and 0+0. If you check, you will see that the result comes out correctly. But the operation 1+1 also yields a lit bulb, that is, 1, whereas the correct result is 2 (10 in binary). Therefore, this “computer” is not a correct adder even for the restricted set {0,1}: it performs only three of the four addition operations.
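A minimal Python simulation of the parallel circuit, assuming an idealized model in which a closed switch is 1, an open switch is 0, and a lit lamp is 1 (engineers call this circuit an OR gate). The function name is mine, for illustration:

```python
def parallel_circuit(a: int, b: int) -> int:
    """Two switches in parallel: the lamp lights (1) if at least one switch is closed."""
    return 1 if (a == 1 or b == 1) else 0


# Compare the lamp with true addition for all four inputs.
for a in (0, 1):
    for b in (0, 1):
        lamp = parallel_circuit(a, b)
        verdict = "correct" if lamp == a + b else f"wrong (true sum is {a + b})"
        print(f"{a}+{b} -> lamp={lamp}  {verdict}")
```

Running it prints three “correct” lines and one “wrong (true sum is 2)” line for 1+1, which is exactly the three-out-of-four behavior described above.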

If we want to build a computer that performs addition correctly, it must output the correct result for every input; in particular, for 1+1 it needs a second output line for the carry digit. Moreover, to add numbers of any length (not just single digits), we must chain together a series of such one-digit adders, each adding the digits in one position together with the carry from the previous position. There is no room here for details, but in light of what we have seen it is clear that in principle this poses no problem. Any number can be represented by a sequence of 0s and 1s (this is called binary, or base 2), so one can build a circuit that receives two numbers of any length and outputs their sum (as a row of lamps that are on or off, forming a sequence of 0s and 1s that represents the resulting number). This is already a more sophisticated computer that knows how to add numbers.
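The chaining described above is what engineers call a ripple-carry adder. Here is a minimal Python sketch of it, assuming the idealized switch model (switches in series behave as AND, in parallel as OR) plus an inverter (NOT), which the circuits in this column do not include and which I add as an assumption. Each one-digit stage is a “full adder” that also receives the carry from the previous position. All function names are hypothetical.

```python
def or_gate(a: int, b: int) -> int:   # switches in parallel
    return a | b


def and_gate(a: int, b: int) -> int:  # switches in series
    return a & b


def not_gate(a: int) -> int:          # inverter (assumed, not shown in the column)
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    # "one or the other, but not both", built only from the gates above
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Adds two bits plus an incoming carry; returns (sum_bit, carry_out)."""
    partial = xor_gate(a, b)
    sum_bit = xor_gate(partial, carry_in)
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return sum_bit, carry_out


def ripple_carry_add(x: list[int], y: list[int]) -> list[int]:
    """Adds two binary numbers given as bit lists, most significant bit first."""
    width = max(len(x), len(y))
    x = [0] * (width - len(x)) + x  # pad the shorter number with leading zeros
    y = [0] * (width - len(y)) + y
    result, carry = [], 0
    for a, b in zip(reversed(x), reversed(y)):  # start from the rightmost digit
        s, carry = full_adder(a, b, carry)
        result.append(s)
    if carry:
        result.append(carry)  # a final carry adds one more digit on the left
    return list(reversed(result))


print(ripple_carry_add([1], [1]))           # [1, 0]        i.e. 1+1 = 2
print(ripple_carry_add([1, 0, 1], [1, 1]))  # [1, 0, 0, 0]  i.e. 5+3 = 8
```

Note that nothing in the code “knows” arithmetic beyond the gate rules: the correctness of the sum comes entirely from how the designer wired the stages together.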

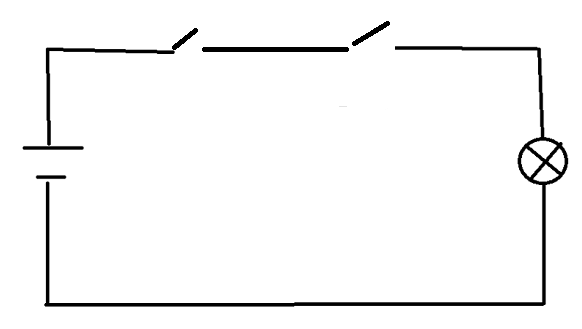

In the same way one can build a computer that performs additional operations, like multiplication, division, exponentiation, and the like. Here is, for example, a computer for performing multiplication. The following electrical circuit performs precisely the operation of multiplying any two digits from {0,1}:

Here the switches are connected in series, and therefore the lamp will light only when both inputs are 1. Indeed, the product in all the other cases is 0.
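The same idealized simulation for the series circuit (an AND gate, in engineering terms). Unlike the parallel circuit, it is correct on all four cases of its operation, since the product of two binary digits is 1 only when both are 1. The function name is mine:

```python
def series_circuit(a: int, b: int) -> int:
    """Two switches in series: the lamp lights only if both switches are closed."""
    return 1 if (a == 1 and b == 1) else 0


for a in (0, 1):
    for b in (0, 1):
        assert series_circuit(a, b) == a * b  # matches multiplication in every case
        print(f"{a}*{b} -> lamp={series_circuit(a, b)}")
```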

Now think of a computer that does both multiplication and addition. It will have to be built from different units that perform those two operations. And so for additional operations as well. Performing many operations will require more circuits of this kind, but this is only a matter of complexity. The principle is the same.

There are, of course, also non-arithmetic operations that can be performed in a similar way. For example, a computer that plays chess must be built so that it receives a board position as input and outputs a move. If one assigns a number to each piece and to each square, every board position can be represented by some number, and the task becomes producing a numerical output in response to the input number, an output that represents the new position (this is, of course, an insanely complicated method, and it is not really reasonable to build a chess-playing computer this way). But in principle such circuits too can be added to our computer, which can now both carry out mathematical calculations and play chess.
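One possible way to turn a board position into a single number, sketched in Python under my own hypothetical encoding (not a standard chess format): each square holds one of 13 contents (empty, six white pieces, six black pieces), so the board can be read as a 64-digit number in base 13. As the text says, no real chess program works this way; the sketch only shows that “position in, position out” can be recast as “number in, number out.”

```python
# Hypothetical encoding: "." = empty, "PNBRQK" = white pieces, "pnbrqk" = black pieces.
PIECES = ".PNBRQKpnbrqk"
BASE = len(PIECES)  # 13 possible contents per square


def board_to_number(board: str) -> int:
    """Turns a 64-character board (squares a1..h8, rank by rank) into one integer."""
    if len(board) != 64:
        raise ValueError("a board must have exactly 64 squares")
    n = 0
    for square in reversed(board):  # square a1 ends up as the least significant digit
        n = n * BASE + PIECES.index(square)
    return n


def number_to_board(n: int) -> str:
    """Inverse of board_to_number: reads the base-13 digits back off one by one."""
    squares = []
    for _ in range(64):
        n, digit = divmod(n, BASE)
        squares.append(PIECES[digit])
    return "".join(squares)


START = "RNBQKBNR" + "P" * 8 + "." * 32 + "p" * 8 + "rnbqkbnr"
n = board_to_number(START)
print(n)  # one (very large) number standing for the whole starting position
print(number_to_board(n) == START)  # True
```

A “chess circuit” in the sense of the text would then be a fixed mapping from such input numbers to output numbers.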

What I have described so far is a full-fledged computer, even if a primitive one. But it is important to understand that our computers are not actually built this way. In the description here, every operation required different hardware, that is, a separate circuit that performs it. Building a computer that way would be very wasteful: it would require billions of different circuits, each built differently to perform a different operation. Moreover, a computer of the type I have described cannot perform an operation that is not embedded in its construction. If we want the same device to perform many operations, including ones we did not build into its structure beforehand, we need a different logic. A modern computer is built so that the same device performs a great many different operations.

The logic of a digital computer is different. It has one general structure (this is its hardware), and the difference between different operations is achieved by different programs. For each operation one can write a program responsible for performing it. The program gives different instructions to this general hardware in order to perform the operation for which it is responsible. There is a program for addition, for multiplication, for chess, and so on, and they all use the same hardware structure. Thus the division of labor between software and hardware is created. Note that we are using the same computer, but each operation requires running a different program. Therefore, we are still dealing with a different “device” for each operation—except that the differing device is the hardware and software together.
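A toy Python sketch of this division of labor, assuming a hypothetical four-instruction machine of my own invention (far simpler than any real processor). The function `run` plays the role of the general hardware: one fixed loop that never changes. Addition and multiplication are just two different lists of instructions fed to it.

```python
def run(program: list[tuple], registers: dict[str, int]) -> dict[str, int]:
    """Fixed 'hardware': executes whatever program it is given.

    Hypothetical instruction set:
      ("add", dst, src)   registers[dst] += registers[src]
      ("dec", r)          registers[r] -= 1
      ("jnz", r, target)  jump to instruction number `target` if registers[r] != 0
      ("halt",)           stop and return the registers
    """
    registers = dict(registers)  # work on a copy
    pc = 0  # program counter: index of the next instruction
    while True:
        op, *args = program[pc]
        pc += 1
        if op == "add":
            dst, src = args
            registers[dst] += registers[src]
        elif op == "dec":
            registers[args[0]] -= 1
        elif op == "jnz":
            reg, target = args
            if registers[reg] != 0:
                pc = target
        elif op == "halt":
            return registers
        else:
            raise ValueError(f"unknown instruction {op!r}")


# "Software": a = a + b
ADD_PROGRAM = [("add", "a", "b"), ("halt",)]

# "Software": p = a * b by repeated addition (assumes b >= 0)
MULTIPLY_PROGRAM = [
    ("jnz", "b", 2),   # 0: if b != 0, enter the loop
    ("halt",),         # 1: b == 0, so the product stays 0
    ("add", "p", "a"), # 2: p += a
    ("dec", "b"),      # 3: b -= 1
    ("jnz", "b", 2),   # 4: repeat while b != 0
    ("halt",),         # 5: done
]

print(run(ADD_PROGRAM, {"a": 2, "b": 3})["a"])               # 5
print(run(MULTIPLY_PROGRAM, {"a": 6, "b": 7, "p": 0})["p"])  # 42
```

In both cases the method of solution was written by the programmer; the loop in `run` only follows the instructions.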

A computer program consists of a set of instructions the computer receives whose purpose is to perform a defined operation. How to do this (the algorithm) is the programmer’s concern. He writes the series of instructions he designs in order to achieve the desired result. In this sense, the programmer is the one who solved the problem, and the computer merely follows the instructions it receives from the program. This is completely analogous to the creator of the electrical circuit described above—he is the one who solved the problem, not the computer. The difference between that primitive computer and a sophisticated digital computer is not essential for our purposes.

So far, a very schematic and superficial description of a digital computer. Now we can make two important observations about it.

Two Features of Digital Computers

Two points important for our purposes, and they are true even for the most sophisticated digital computers:

- In all these situations, the solution to the problem is put into the system by whoever builds the machine (or writes the program). He has already solved the problem in advance (in the example above, he knows the results of adding the various digits) and builds the machine so that it will actually produce (implement) his solution. The machine does not solve anything by itself. So why build such machines at all, if they contain nothing beyond what we already have? Because the machine can add large numbers very quickly, a task that is difficult and time-consuming for the programmer. It does so on the basis of the programmer’s knowledge. What the programmer has brought into the game is knowledge of how to add two digits and knowledge of how to assemble digit-wise additions into the addition of two large numbers. For a specific exercise, say 2956*(149530+250611), the programmer might manage it, but it would take him a long time. The computer will do it in a tiny fraction of a second, but entirely on the basis of the programmer’s knowledge embedded in it. This is the added value of such a computer.

- The computers I described do not know what 0 is or what 1 is. They manipulate electrical voltages, and we (the users) are the ones who translate them into the language of numbers. The computer also does not know what addition is or what a result is. It is not, in fact, truly performing an addition. The computer merely shuttles electrical currents from here to there—and that’s it. The interpretation of what is happening there—that is, the understanding that the electrical input represents two digits, the electrical output represents a result, and that an addition operation is being performed—all of this resides with the user and not in the computer itself. The computer is a hunk of metal, not essentially different from the electrical circuits in my home.
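To make the first point concrete, here is a minimal Python sketch of the kind of knowledge the programmer puts in: the schoolbook procedures for adding and multiplying decimal numbers digit by digit, with carries. The function names are my own, and working on strings of decimal digits is a simplification (real hardware works in binary). Each step only combines single digits and a small carry; the program merely applies these steps quickly.

```python
def long_add(x: str, y: str) -> str:
    """Schoolbook addition of two decimal numerals, one digit column at a time."""
    x, y = x.zfill(len(y)), y.zfill(len(x))  # pad both to the same length
    digits, carry = [], 0
    for a, b in zip(reversed(x), reversed(y)):  # rightmost column first
        carry, digit = divmod(int(a) + int(b) + carry, 10)
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))


def long_multiply(x: str, y: str) -> str:
    """Schoolbook multiplication: one shifted partial product per digit of y, summed by long_add."""
    total = "0"
    for shift, b in enumerate(reversed(y)):
        partial, carry = [], 0
        for a in reversed(x):
            carry, digit = divmod(int(a) * int(b) + carry, 10)
            partial.append(str(digit))
        if carry:
            partial.append(str(carry))
        row = "".join(reversed(partial)) + "0" * shift  # shift left by appending zeros
        total = long_add(total, row)
    return total.lstrip("0") or "0"


inner = long_add("149530", "250611")
print(inner)                         # 400141
print(long_multiply("2956", inner))  # 1182816796
```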
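A small Python illustration of the second point: what the machine holds is only a pattern of on/off states, and what that pattern “means” depends on a convention the user applies. The conventions shown (unsigned integer, two’s-complement signed integer, Latin-1 text) are standard encodings I chose for the example; the helper name is hypothetical.

```python
def interpretations(pattern: bytes) -> dict[str, object]:
    """Reads one bit pattern under several conventions; the choice of convention is ours."""
    return {
        "unsigned integer": int.from_bytes(pattern, "big"),
        "signed integer": int.from_bytes(pattern, "big", signed=True),
        "latin-1 text": pattern.decode("latin-1"),
    }


print(interpretations(bytes([0b01000001])))
# {'unsigned integer': 65, 'signed integer': 65, 'latin-1 text': 'A'}
print(interpretations(bytes([0b11111111])))
# {'unsigned integer': 255, 'signed integer': -1, 'latin-1 text': 'ÿ'}
```

The eight voltages are identical in each call; whether they are “65,” “A,” “255,” or “-1” is decided outside the machine.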

Can a Digital Computer Be Considered a Person?

In light of what I have described so far, can anyone imagine regarding such a digital computer as a person? Are the electrical circuits in my home a person? The simple machines shown in the diagrams above are certainly not a person. These are mechanical contraptions—products of the designer’s and the programmer’s knowledge and planning. They do not think or understand; they merely conduct currents. The thinking and the meaning of the things are obtained only by the user. I do not think anyone would disagree that a battery with two switches and a lamp is not a person.

What does a digital computer have beyond the simple circuit I described? The computer is nothing but a collection of a great many electrical circuits that perform many different operations and respond to the instructions of the program inserted into them. Suppose this complex collection—capable of occupying the area of an entire country or even the whole globe—can conduct an intelligent conversation with me and successfully pass the Turing test. Would anyone consider such a thing a person? Such a computer is not essentially different from the simple circuit shown above, apart from higher complexity and intricacy. It simply contains many circuits of that sort. Even if we move to the modern and more sophisticated form—the one that uses the same hardware and performs different operations by different programs—would that be called a person? It seems to me that in light of what I have described it is very hard to accept Turing’s thesis. The fact that all the operations are carried out by the same machine does not make it a person. That is a technological difference, nothing more.

What would you say if, instead of writing the result or lighting bulbs, we made this computer speak it (via a speech synthesizer and a speaker that produce speech sounds)? And if we also gave it a human shape? This human-shaped computer would receive a mathematical problem and respond with the word “8” (the result it computed). Would that make it a person? Unlikely. This is a talking machine in human form. In fact, it does not even speak; it merely emits sounds. Speech is not merely producing sounds, but producing sounds that represent and convey mental content present in the minds of the two speakers. In the golem I have described there is no mental dimension and no intellect. The represented content is not inside it; only the representation (digits and letters) is. As I explained, the mental content that the operations and results represent lies only in the user’s mind and is not connected to the computer itself. Here we have a representation without a thing represented.

We are used to saying that something red inside and green outside, with watermelon seeds, is most likely a watermelon. Likewise, what quacks like a duck and walks like a duck is a duck. Well, no. Our machine “speaks” like a person (but does not truly speak) and computes like a person (but does not truly think), and it is not a person. A golem that moves its lips and writes exactly what a person would write in such a situation is still a golem, a golem that imitates human beings (like the “Rebbe” above). A plastic watermelon that is red inside and green outside is not a watermelon, even if you add watermelon flavor so that I taste it when I eat it.

On this occasion, allow me to indulge myself and direct you to an amazing video that was sent to me a few days ago.

I have still not recovered from it. The video depicts a quasi-evolutionary process of artificial “creatures” (called Strandbeests), which are nothing but assemblages of rods connected to one another by various joints. These creatures move about much like animals. They also undergo an evolution that helps them cope with their environment (sand, wind, water, and the like) and survive. At some point their creator, the Dutch artist Theo Jansen, even builds them a primitive, simple brain (with mechanical neurons; this stage is truly unbelievable) that can make movement decisions and execute them. Would you regard this creature as a living being? Watch the video and you will see that the answer is clearly no. To my mind, regarding a digital computer as a person is akin to treating a Strandbeest as a living creature.

In the next column I will deal with a new type of machine: neural networks, and with their contribution to clarifying the issue of human and machine.

Your analogy to the person in the Chinese room is indeed apt, but it is important to emphasize that the model experiences no pain here. In training, all you are trying to do is find a minimum of a function formulated from the data you have, such that the more accurate the model, the lower the value the function returns. That is all: you reach a minimum of the function (using gradient descent, GD). The model does not really suffer or feel pain. It is important to emphasize this.
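The commenter’s description of training, driving a loss function to its minimum with gradient descent, can be sketched in a few lines of Python. This assumes the simplest possible model (a single weight w in y = w * x), made-up data points on the line y = 2x, and a hand-picked learning rate; all names and numbers are illustrative only.

```python
def loss(w: float, data: list[tuple[float, float]]) -> float:
    """Mean squared error of the model y = w * x: the lower, the more accurate."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def gradient(w: float, data: list[tuple[float, float]]) -> float:
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def gradient_descent(data: list[tuple[float, float]], w: float = 0.0,
                     lr: float = 0.05, steps: int = 200) -> float:
    for _ in range(steps):
        w -= lr * gradient(w, data)  # a step downhill on the loss; no "shock" involved
    return w


data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # made-up points on the line y = 2x
w = gradient_descent(data)
print(round(w, 4))  # close to 2.0
```

The “correction” after each wrong answer is just arithmetic on w.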

A point you did not address: what is the brain if not a biological computer? Admittedly, the analogy between artificial neural networks and the human brain is loose at best, but imagine a 3D printer accurate down to the atomic level, supplied with the right biological materials and kept in the right environment. What would stop it from printing a human brain? And if nothing would, what is the difference between the human brain and a metal computer? True, the human brain is not a literal computer working on 0/1, but it is a biological computer, with its own learning algorithms, its own shortcomings (human RAM, for example, is ridiculous), and its own biases. How far-fetched is it to imagine the human brain as a computer that interprets certain signals as unpleasant and others as pleasant and acts accordingly? In any case, why do you think it is impossible to develop another computer, perhaps biological, perhaps a combination of iron and flesh, that can simulate well enough the things that you (a biological computer yourself) call pain, awareness, and understanding, to the point of reaching the human level?

I agree that artificial intelligence today does not meet the human criteria formulated within the paradigm of the biological computer that is our brain. But why do these criteria have any objective priority? And why (and whether) do you think that other computers, not necessarily based on 0/1, will never be able to reach a state similar enough to human existence that they would in practice perform what you call understanding, knowledge, awareness, emotions, etc.?

It seems to me that I have rambled too much, and I am not sure I formulated a coherent question (or any question at all). You have indeed chosen an interesting topic for us this time. I would be happy if you responded to whatever part you choose 🫏

I pinch myself to make sure I didn't dream this message. I hereby make a grand announcement to one and all: OpenAI has received permission from the Helsinki Committee to abuse its software. This is a really important comment.

Do you know how many points I haven't addressed in this column yet? Incidentally, assuming you read the column, I wrote that in the third column I would address the question of whether a person is a machine. Brain printing doesn't seem relevant to this question to me.

Perhaps your last comment explains this strange message.

Always the same response from the stinging Miki. A bit mean. Here is someone writing from purely good motives, and you have to convey your message in an insulting way. I like reading what you write, but why make this environment toxic?

Agreed

Sometimes the Rabbi exaggerates a bit

Try to give him the benefit of the doubt

But that's the thing. The brain is a biological computer, but a person (who has a brain) also has a soul, attached to the brain, in which mental processes occur in parallel with the mechanical-biological activity of the brain. If you print a brain, you will again have a machine, but without a soul attached to it. In other words, the one who thinks is our soul, not the brain, which is just a (wonderful) machine. The brain is the place where thought (and calculation) takes place, or the tool through which it is carried out, but the one who thinks and calculates is the soul (i.e., the person).

When AI systems start to disagree among themselves, believers in God against non-believers, I will know that artificial intelligence has reached a human level.

I fear that the division between infidels and believers is itself the division between artificial intelligence and humans…

Thank you very much for the fascinating column.

For those demanding rights for AI software, see more at this link.

https://www.tecnobreak.com/en/engineer-says-google-ai-hired-its-own-lawyer/amp/

I noticed that the link doesn't work so see here: https://www.businessinsider.com/suspended-google-engineer-says-sentient-ai-hired-lawyer-2022-6

and https://www.youtube.com/watch?v=LXAsqWsfj8Y

and https://nypost.com/2022/06/24/suspended-google-engineer-claims-sentient-ai-bot-has-hired-a-lawyer/

And also on Wikipedia: https://en.wikipedia.org/wiki/LaMDA

and https://en.wikipedia.org/wiki/Artificial_consciousness